Dynamic Boltzmann Machines

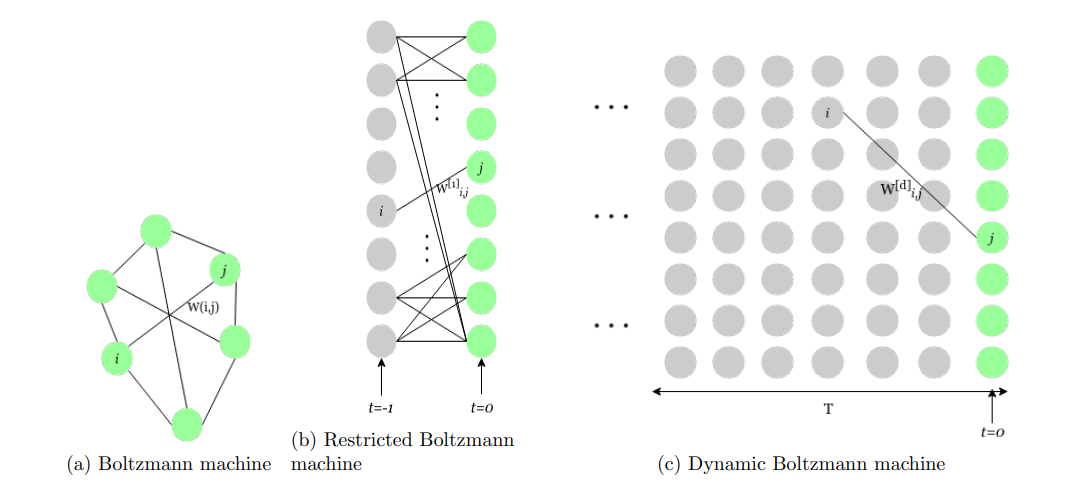

The Dynamic Boltzmann Machine (DyBM) was proposed by Osogami et al. in 2015, when their team at IBM Research in Tokyo showed how a network of just 7 neurons could memorize a sequence of letters of the alphabet [1]. Inspired by this work, we set out to explore DyBM and assess its usefulness for improving performance on time-series prediction tasks.

The Dynamic Boltzmann Machine elegantly incorporates the notion of time into the existing framework of Boltzmann machines. It can be viewed as a Boltzmann machine with infinitely many layers of neurons unfolded in time. There are no connections between neurons in space (within a layer); the only connections are between neurons at different points in time.

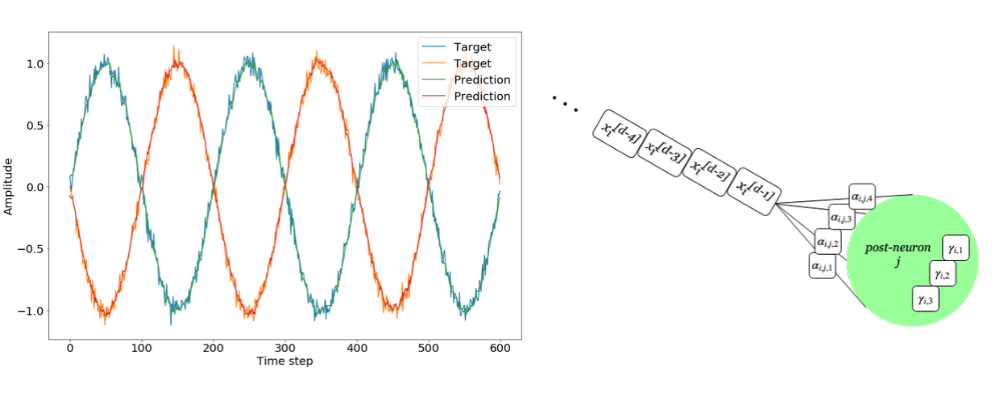

DyBM is essentially an energy-based model. Its most interesting aspect is its interpretation in terms of biological neuronal networks. A DyBM consists of a network of neurons and memory units. Each pair of neurons is connected by two separate unidirectional FIFO queues, one per direction, each storing the past states of its pre-synaptic neuron. In addition, each neuron has a memory unit for storing neural eligibility traces, which summarize the neuron's past activity. A synaptic eligibility trace is associated with the synapse between a pre-synaptic neuron and a post-synaptic neuron, and summarizes the spikes that have arrived at that synapse, via the FIFO queue, from the pre-synaptic neuron.
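
The memory structures described above can be sketched in a few lines of Python. This is a minimal illustration, not the reference implementation: the class names `Synapse` and `Neuron`, the `delay` and `decay` parameters, and the exponentially decaying update rule for the traces are assumptions made for this example.

```python
from collections import deque


class Synapse:
    """One directed connection: a FIFO queue plus a synaptic eligibility trace."""

    def __init__(self, delay, decay):
        # The queue holds the last `delay` states of the pre-synaptic neuron,
        # so a spike takes `delay` steps to reach the synapse (assumed model).
        self.queue = deque([0.0] * delay, maxlen=delay)
        self.decay = decay  # assumed decay rate, 0 <= decay < 1
        self.trace = 0.0    # synaptic eligibility trace

    def step(self, pre_state):
        """Push the newest pre-synaptic state; return the state that arrives."""
        arrived = self.queue[0]       # oldest entry reaches the synapse
        self.queue.append(pre_state)  # maxlen drops that oldest entry
        # The trace summarizes arrived spikes, discounting older ones.
        self.trace = self.decay * (self.trace + arrived)
        return arrived


class Neuron:
    """A neuron with a memory unit holding its neural eligibility trace."""

    def __init__(self, decay):
        self.decay = decay  # assumed decay rate, 0 <= decay < 1
        self.trace = 0.0    # neural eligibility trace

    def step(self, state):
        """Fold the neuron's current state into its eligibility trace."""
        self.trace = self.decay * (self.trace + state)
        return self.trace
```

With `Synapse(delay=2, decay=0.5)`, a single spike fed in at the first step leaves the queue on the third step, and only then does the synaptic trace become non-zero. Using a bounded `deque` keeps the queue at a fixed length without manual bookkeeping.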

Realizing DyBM in hardware is a promising research direction, given the advent of spiking neural network (SNN) chips such as Intel’s Loihi 2 and IBM’s TrueNorth. We came up with an initial framework for a hardware realization of DyBM.

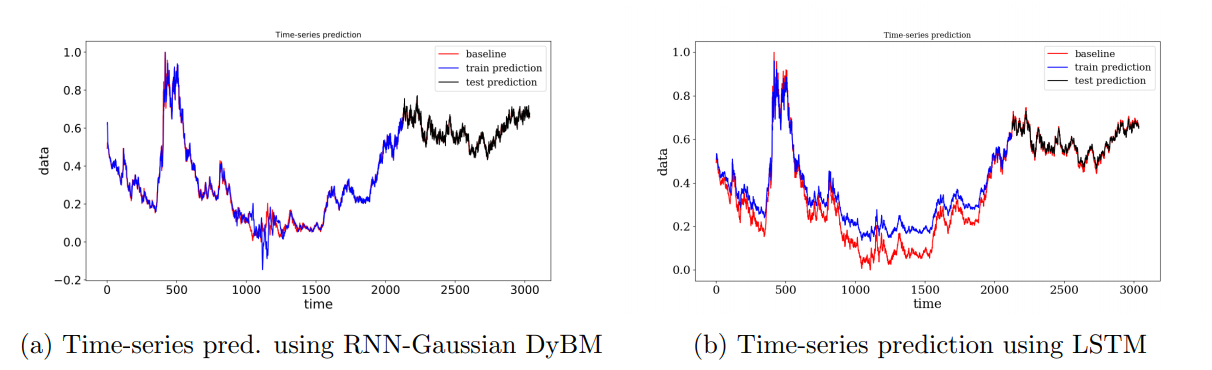

To validate DyBM on real-life time series, we used an RNN-Gaussian DyBM to predict a variable in different tasks. We used LSTM networks as the benchmark, since LSTMs have emerged as a state-of-the-art model for time-series prediction, and compared the two models using RMSE as the metric. We observed that the DyBM occasionally outperforms the LSTM.
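
For reference, RMSE (root mean squared error) can be computed as in the sketch below. The function name `rmse` and its list-based interface are choices made for this example and are not taken from our experiment code.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean of squared differences, then the square root.
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))
```

A lower RMSE on the same held-out series means predictions that are closer to the observed values on average, which is how the two models are ranked against each other.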